Techniques of Reconstruction

In SPECT reconstruction the gamma camera has to be rotated around the patient and more images have to be taken in several positions using the step-and-shoot technique. This technique means that the images are taken when the camera has stopped moving. It is also possible to acquire data in list mode, in which the gamma camera moves continuously and does not stop in the measuring points.

Due to the low sensitivity (~0.01%) of the collimators more (typically 2-4) gamma cameras are used simultaneously, thus taking a 360-degree image (in cardiac studies a 180-degree image is taken by two heads placed in an ‘L’ shape). The orbit can be circular or body-contouring. In the latter case there are infrared sensors in front of the collimator which control the movement. This way a better resolution can be achieved, since the camera can be positioned as close to the body as possible, thus reducing the distance of the collimator and the source.

The planar images taken by the gamma camera at different angles form a so-called series of projections of the three-dimensional distribution of the radioactive source (see the left part of the image below). This series can be rearranged into a sinogram by regarding the axial slices of the two-dimensional images as the series belonging to a given angle (as shown on the right side of the image below). This way the sinogram series will contain as many elements as many pixel rows there are in the planar projections. A sinogram can be constructed by selecting a given axial slice from each two-dimensional image and organizing them by angle. With this method we practically produce the two-dimensional Radon transform of the three-dimensional distribution in more slices. Using the Radon transform, the original spatial source distribution can be reconstructed by applying inverse Radon transformation. Two aspects of the reconstruction process can be considered, since there is an analytical and an iterative realization of the inverse Radon transformation as well.

The analytical reconstruction is based on the Fourier slice theorem (central slice theorem) which states that the one-dimensional Fourier transform of the Radon transform with respect to t is the same as the two-dimensional Fourier transform of the original distribution (as shown in the image below).

This means that the distribution being examined can be reconstructed by an inverse Fourier transform. However, the Fourier transform of the projections does not fill up the two-dimensional Fourier space evenly – the sample density decreases when moving away from the centre, which means that interpolation cannot be avoided if we want to apply DFT.

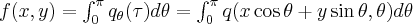

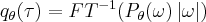

The reconstruction process described above is realized by filtered backprojection on an f(x,y) two-dimensional Radon transform, if the real spatial distribution is q(x,y):

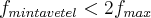

The question arises as to how large the matrix should be in which the images are collected, i.e. how many slices of the given volume are needed. The sampling theorem presents a basis for this, which states that a sampled signal can be recovered if the signal is bandlimited, i.e. a maximal frequency exists (Nyquist frequency) and there are no components with greater frequencies, and the maximum frequency component is sampled at least twice.

, where  is the Nyquist frequency and P is the pixel size. Because of this the Ramp filter can be modified during the filtered backprojection by cutting at the Nyquist frequency. This way the excessive amplification of noise can be avoided. The maximum frequency can be expressed by the pixel size, i.e. the question of the size of the matrix is related to the proper choice of pixel size.

is the Nyquist frequency and P is the pixel size. Because of this the Ramp filter can be modified during the filtered backprojection by cutting at the Nyquist frequency. This way the excessive amplification of noise can be avoided. The maximum frequency can be expressed by the pixel size, i.e. the question of the size of the matrix is related to the proper choice of pixel size.

The pixel size can be given by the size of the field of view (FOV), the number of the sampling points (N) and the size of the zoom factor (Z).

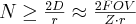

In this case the diameter (D) of the object to be imaged determines the number of the sampling points through the sampling theorem, as shown in the figure below:

where r is the intrinsic resolution of the system (in parallel collimators this value is 8-12 mm).

Comparing the formulae we find that the following is true for the pixel size:

which is typically 3-6-mm. This means that in the ~40 cm field of view used in human SPECTs a 64x64 or a 128x128 matrix is the right choice. In cardiac examinations the diameter of the field of view is only 20-25 cm, so in that case a smaller matrix is sufficient.

In accordance with the concepts described above, sampling density can also be given using the sampling theorem:

azaz

azaz

The choice of the size of the matrix is affected by the fact that in order to achieve a given image statistics, four times as long time of measurement is needed for a two times as large accumulation matrix. This is due to the fact that Poisson noise occurs in the image because the collected events are independent and the variance is proportional to the number of counts per detector pixel.

The presence of noise is critical because of the reconstruction process, since the filtered backprojection amplifies the high-frequency components – i.e. the noise – in the image. A solution of this can be the application of a low-pass filter, i.e. the further modification of the Ramp filter so that the high-frequency components will not be amplified that much. Well-known solutions include the application of the Shepp-Logan, the Hanning and the Butterworth filters. However, low-pass filtering blurs the image and the edges, it performs smoothing, thus it deteriorates the resolution. Therefore, instead of optimizing the filters, a reconstruction algorithm which handles noise well can give better results. Such an algorithm is the ML-EM (Maximum Likelihood – Expectation Maximization) method, an iterative inverse Radon transformer.

The advantage of the ML-EM reconstruction is that it uses what we already know, namely that the image statistics follows a Poisson distribution. Furthermore, theoretically the entire physics of the imaging system can be built in the elements of the system matrix, which give the probability that a gamma photon being formed in a given voxel will arrive in exactly the p detector element. In a case when the system matrix is calculated from geometry only we get a rare matrix, but if the standard deviation is also considered, this will not be the case. In two-dimensional reconstruction the elements of the matrix can be calculated in advance, but in three-dimensional reconstruction on-the-fly calculation is needed, since the size of the whole matrix would fall in the order of Tbyte.

The result of the ML-EM reconstruction is usually more smooth and free from artefacts (the left side of the above picture shows a filtered backprojection combined with low-pass filtering, the right side shows the result of an ML-EM reconstruction without filtering) as expected; however, it has a slow convergence (100-500 iterations are necessary) and it has high computational requirements (in a three-dimensional case, in case of a CPU cluster or GPU it gives an image in less than 1 hour). When considering the number of iterations, the fact that the convergence diverges due to the inaccurate system matrix model and the noise needs to be taken into account, which means that the errors in reconstruction start to increase above a certain iteration number. That is why an efficient stopping rule or regularization (e.g. based on total variance (TV)) is needed. In the latter case a penalty function is used which punishes the outlier voxels but keeps the edges. The disadvantage of this is that it may produce artefacts in the image.