Non-statistical iterative reconstructions

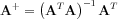

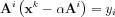

With the previously introduced notations, the fundamental equation of the image reconstruction reads:

Do not forget that the A matrix is a sparse matrix, not sqare and the number of its elements may be around 1012.

A Moore-Penrose generalized/psuedoinverse

As the matrix A is not square, most of the common methods for solving the equality (pl. Gauss-Siedel iteration) cannot directly be applied. Either when our system is overdetermined or underdetermined the criteria for the solution can be stated as:

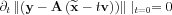

where ||.|| is a norm of our choice. Let us assume that  minimizes the above expression and let us choose the scalar product for the norm. Now for an arbitrary

minimizes the above expression and let us choose the scalar product for the norm. Now for an arbitrary

vector and a t real number it must be true that

vector and a t real number it must be true that

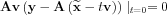

Calculating the derivative

thus

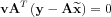

This must be true for every v so

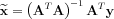

The marix ATA is a square matrix now,and if it is invertible:

The A+ Moore-Penrose psudoinverse:

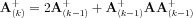

We can use for the calculationof A+ an UV decomposition, or any other regular method, can be calcuated by a simple though not too efficient iteration (Ben-Israel and Cohen iteration):

A Kaczmarz iteration

The system response matrix of currently available PET, SPECT and CT scanners is large compared to the easily accessable computer hardware, or/and computations on sparse matrices are not very efficient. A simple, stable and fast algorithm is the Kaczmarz iteration. The underlying idea is that a nxm matrix A is actually a

n number of equation of mdimensional hyperplanes and their crossing point is the x solution. If the system is overdetermined and some noise is added, perhaps the intersection point will not be really a point. If it is underdetermined it is possible that we can narrow down the solution only to a certain domain.

The essence of the Kaczmarz-iteráció is that we are trying to tend towards the domain of the solution by projecting perpendicularly to the hyperplane given as a single line of the matrix our previous x guess for a solution.

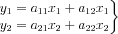

For example let us take a 2x2-es A matrix with elements aii.

Now the following two-equations system is given:

where the crossing point is our solution. We have sketched on the graph the first 4 points of the iterations.

In this 2D example it can be readily seen that

- if the lines were perpendicular to each other i.e. the lines of the matrix were linearly indipendent, we would get the exact solution in two steps

- if the lines were parallel, i.e. the set of eqations were contraversial the iteration would oscillate between the two lines

- if the two lines were inclined only very narrowly, i.e. the rows were much correlated with each other the iteration would converge very slowly. The more independent they are the faster the method converges

A serious advantage of the Kaczmarz iteration that we only use a single line of the matrix for an iteration step, we do not need to handle the whole matrix together.

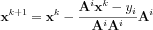

Now let us look at the steps of the projection now for a general case. Index i nominates which line of the matrix is being used, which row vector comes to play.

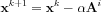

Let xk be teh solution obtained in the k-th step. Inthe k+1.

iteration step we should project this point on the hyperplane determined by equation i.The equation of this hyperplane reads:

Earlier we hav seen, that such an equation describes a plane perpendicular to the direction vector  , with distance from the origin of

, with distance from the origin of  . So, when in the kth step

. So, when in the kth step

we would like to project our solution to the i-th hyperplane, we need to go in the direction of  , according to the convention of distance

, according to the convention of distance  .For simpler formulas let us include in this expression the normalisation of the unit vector, so now we can write the for next point:

.For simpler formulas let us include in this expression the normalisation of the unit vector, so now we can write the for next point:

The next point must be on the i. next hyperplane, therefore:

Ebből kifejezhető

The solution in the k+1. iteration steps therefore reads:

The Kaczmarz-iteration in the medical diagnostic literature is mentioned also as ART (Algebraic Reconstruction Technique). The method is simple, but when applied only advanced versions are used. It is interesting that the Kaczmarz-iteration can be seen as an extremely low subset version of the OSEM iteration scheme.

Our next chapter would extend the matrix equation to probalistic interpretation of the elements and the measure of the distance.

The original document is available at http://549552.cz968.group/tiki-index.php?page=Non-statistical+iterative+reconstructions