Single rate, pile-up, dead time and random rate

One of the advantages of PET is that it is quantitative, so the pixel values of the greyscale image are, in theory, proportional to the local activity. Of course, every detector has dead time, so the higher the activity is, the less efficient the detection becomes. To correct for the losses caused by dead time, we need to know how large they are. The first step is to estimate, at a fixed count rate, the number of events that ‘pile up’ on each other, meaning that they arrive so fast that the electronics cannot separate their signals.

Pileup

If two or more photons arrive in a detector in rapid succession, the signals they generate pile up on each other. This phenomenon is known as pileup. The time of arrival and the energy of the individual events cannot be determined from the summed signal, or only with difficulty and dubious accuracy. Let us examine the probability of pileup.

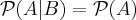

We examine a single detector. The number of photons arriving in the detector and producing a flash per unit of time is called the single rate. Since the decays are independent of each other, the probability of pileup equals the probability that a chosen event is piled up. Let us suppose that the event occurred at time 0. The question is the probability that at least one other event comes in within the following interval of one base width (signal width) $t$. Let A denote that at least one event arrives in the interval $[0, t]$, and let B denote that an event arrived at time 0. Then

$$P(A \mid B) = P(A)$$

due to the independence of events. (Similarly, the result of a die roll is not affected by the previous results.) Therefore, it is sufficient to calculate $P(A)$.

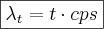

The parameter of the Poisson distribution, $\lambda$, is the expected number of counts in the examined time interval; it can be written as the product of the rate per unit of time and the length of the time window. (This is necessary because the rates are given in cps, but here the natural unit of time is the signal length rather than the second.) That is, if we know that the expected number of counts equals $n$ for an interval of 1 second, then the calculations must be performed using a parameter of $\lambda = n t$ for an interval with base length $t$.

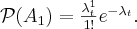

The signal length is about 200 ns and the half-life of the isotopes is about 9-11 orders of magnitude longer, so the rate can be considered constant during the length of the signal. Therefore, with $\lambda = n t$ (where $n$ is the single rate and $t$ the base length), the probability that exactly one event arrives in an interval of one base length (let it be denoted by $P_1$) is:

$$P_1 = \lambda e^{-\lambda} = n t \, e^{-n t}$$

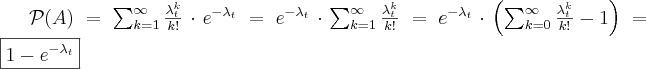

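If we want the probability of at least one event, we need to add up the probabilities of the arrival of one event, two events, three events, etc.:

$$P_{\mathrm{pileup}} = \sum_{k=1}^{\infty} \frac{\lambda^k}{k!} e^{-\lambda} = 1 - e^{-\lambda} = 1 - e^{-n t}$$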

The pileup probability calculated in this way is depicted in Figure 1 as a function of the detected single rate for base widths of 140 ns, 200 ns and 300 ns. If we have a look at the formula above, we will see that the count rate and the base width appear in it only through $\lambda$, so the pileup probability is determined by $n t$, the product of the count rate and the signal length. This sets a requirement for the electronics: the signal must be short, so the preamplifiers have to be designed so that they do not stretch the signal while amplifying the PMT signals. Usually, the higher the amplification is, the smaller the bandwidth of the amplifier, i.e. the more high-frequency components are lost and the longer the signal becomes. That is why it is advantageous if the signal of the photodetector already has a sufficiently high amplitude (as with PMTs and SiPMs) and does not need much further amplification (as it does with APDs).
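The dependence on the product of count rate and signal length can be tried out numerically. The following is a minimal sketch of the pileup formula $1 - e^{-nt}$; the function name and the sample single rates are illustrative assumptions, only the three base widths come from the text.

```python
import math


def pileup_probability(single_rate_cps: float, base_width_s: float) -> float:
    """Probability that at least one further event arrives within one base width."""
    lam = single_rate_cps * base_width_s  # Poisson parameter for one base width
    # 1 - exp(-lam), written with expm1 so that small lam values stay accurate
    return -math.expm1(-lam)


if __name__ == "__main__":
    # Base widths from the text; the single rates are assumed sample values.
    for width_ns in (140, 200, 300):
        for rate_cps in (1e5, 1e6, 5e6):
            p = pileup_probability(rate_cps, width_ns * 1e-9)
            print(f"{width_ns} ns, {rate_cps:.0e} cps: {p:.4f}")
```

Because only the product matters, halving the base width has the same effect on the pileup probability as halving the single rate.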

Dead time

Let us give a rough outline of what happens after detecting an event.

- Every detected event generates a trigger signal (in the discriminator); as a result, the process chain is started (integration of the sampled values, calculation of the Anger position, etc.)

- If two events pile up on each other, the integral and the time of arrival of both will be inaccurate.

- The sampled data of the incoming events are processed by some sort of target hardware (usually an FPGA). This takes some time, and the calculations cannot start before the signals have been integrated.

- For now we discuss a single detector; coincidence selection is done after detection. From the point of view of dead time every detector is independent, thus whether it can detect an event or whether it discards it due to dead time does not depend on what happens in the other detectors.

Let us have the following model of event selection strategy: if two events arrive so close to each other that they cannot be separated, let us discard both of them.

Let the event length be $T$.

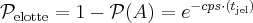

What is the probability of the ‘survival’ of an event under such conditions? In this model an event is kept only if no event has arrived within the time interval $T$ preceding it (otherwise it has to be discarded), and no event comes in within the time interval $T$ after it, either. What is the probability of no events arriving in an interval of length $T$ at single rate $n$? The complementary event is that at least one event arrives, which has the same form as the pileup probability. Thus:

$$P_{\mathrm{before}} = 1 - \left(1 - e^{-n T}\right) = e^{-n T}$$

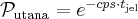

Similarly, the probability of no events arriving in the interval $T$ following the arrival of the event (an arrival there would mean that the event has to be discarded) is:

$$P_{\mathrm{after}} = e^{-n T}$$

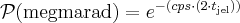

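The event can only be kept if both conditions are met. Since the events are independent, the two probabilities multiply (with $n$ the single rate and $T$ the event length):

$$P_{\mathrm{kept}} = e^{-n T} \cdot e^{-n T} = e^{-2 n T}$$

Because of the independence of the events, every event in the detector is kept with this probability, so the rate that remains after the dead-time losses is:

$$n_{\mathrm{meas}} = n \, e^{-2 n T}$$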

Usually $n_{\mathrm{meas}}$, the rate remaining after the losses, is measured and the real number of arriving photons has to be calculated from it. In actual measurements event pairs are detected because of the coincidence, but dead time describes one detector only. Fortunately, a coincidence event is kept only if both ‘halves’ of it, as single events, are kept. Therefore, for each LOR we have to determine which two detectors it connects, and the correction has to use the product of the single-event losses of those two detectors.
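The survival probability $e^{-2nT}$ of the ‘discard both’ model (single rate $n$, event length $T$) can be checked with a small Monte Carlo experiment. This is only a sketch under the same assumptions as the derivation, namely Poisson arrivals at a constant rate; the function name, the sample rate and the event length are illustrative choices.

```python
import math
import random


def simulate_survival(rate_cps: float, event_length_s: float,
                      n_events: int = 200_000, seed: int = 1) -> float:
    """Fraction of events kept when any two events closer than the event length are both discarded."""
    rng = random.Random(seed)
    # For a Poisson process the gaps between consecutive events are exponential.
    # gaps[i] is the time between event i-1 and event i.
    gaps = [rng.expovariate(rate_cps) for _ in range(n_events + 1)]
    kept = 0
    for i in range(1, n_events):
        # Event i survives only if both its preceding and its following gap
        # are longer than the event length.
        if gaps[i] > event_length_s and gaps[i + 1] > event_length_s:
            kept += 1
    return kept / (n_events - 1)


if __name__ == "__main__":
    rate, length = 1e6, 200e-9  # assumed sample values
    print("simulated:", simulate_survival(rate, length))
    print("formula:  ", math.exp(-2 * rate * length))
```

The simulated fraction should agree with the formula up to statistical fluctuation, which shrinks as `n_events` grows.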

Of course, the values of the parameters in the formula for the rate remaining after dead-time losses can differ for other selection strategies, but in most cases the rate can be written as $n_{\mathrm{meas}} = n \, e^{-n T_{\mathrm{eff}}}$ by introducing some sort of effective dead time $T_{\mathrm{eff}}$. Formally, the expression resembles the pileup formula, but $T_{\mathrm{eff}}$ is affected not only by the length of the signal, but also by the dead time caused by the calculations and by the selection strategy.
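Since the measured rate is known and the true rate is wanted, the relation $n_{\mathrm{meas}} = n \, e^{-n T_{\mathrm{eff}}}$ has to be inverted, and it has no elementary closed-form inverse. The sketch below uses bisection and assumes that the detector operates on the rising branch of the curve ($n < 1/T_{\mathrm{eff}}$) and that both detectors of a LOR share the same effective dead time; all function names are hypothetical.

```python
import math


def measured_rate(true_rate: float, t_eff: float) -> float:
    """Rate remaining after dead-time losses, n * exp(-n * T_eff)."""
    return true_rate * math.exp(-true_rate * t_eff)


def true_rate_from_measured(measured: float, t_eff: float,
                            iterations: int = 200) -> float:
    """Invert measured_rate by bisection on the rising branch (n < 1 / T_eff)."""
    peak = 1.0 / (math.e * t_eff)  # largest measurable rate in this model
    if not 0.0 <= measured <= peak:
        raise ValueError("measured rate is outside the range of the model")
    lo, hi = 0.0, 1.0 / t_eff
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if measured_rate(mid, t_eff) < measured:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def lor_correction_factor(rate_a: float, rate_b: float, t_eff: float) -> float:
    """Multiplier for the coincidences of a LOR connecting detectors a and b.

    A coincidence survives only if both singles survive, so the survival
    probabilities of the two detectors are multiplied.
    """
    survival = math.exp(-rate_a * t_eff) * math.exp(-rate_b * t_eff)
    return 1.0 / survival
```

For example, `true_rate_from_measured(measured_rate(1e6, 400e-9), 400e-9)` recovers the assumed true rate of 1e6 cps, and `lor_correction_factor` is fed with the recovered true single rates of the two detectors.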

Random coincidence

Random coincidence occurs when two events are detected simultaneously (within a time window) that do not originate from the same decay. The probability of this can be determined by the so-called shifted time window estimate.

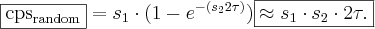

Let the width of the coincidence window be $2\tau$.

Let us imagine that an event arrives in a detector that can be characterized by a single rate $n_1$, and we would like to know the number of events arriving in another detector, characterized by a single rate $n_2$, not at the time of arrival of the first event but a time $t_{\mathrm{shift}}$ later, in the interval $[t_{\mathrm{shift}} - \tau, t_{\mathrm{shift}} + \tau]$.

If $t_{\mathrm{shift}}$ is significantly larger than the temporal resolution, then it is almost certain that these events did not originate from the same decay as the chosen event. (That would only be possible after multiple scattering, which is very unlikely.) The probability that at least one event arrives within a given time window at a given single rate $n_2$ is given by the pileup formula, $1 - e^{-\lambda}$. The derivation of that formula relies on the independence of events. For shifted time windows this still holds, but it would not hold for the true coincidences in the coincidence window around zero. This time $2\tau$ has to be used as the length of the examined time interval instead of the base length, so $\lambda = 2\tau n_2$. The time window is much shorter than the signal length used previously in the pileup formula, so the value of $\lambda$ is small, and the probability of multiple random coincidences (the terms with higher powers of $\lambda$) is small as well; this way a simple expression is obtained.

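Keeping only the term of the Taylor series of the pileup formula that corresponds to the one-fold coincidence, $\lambda e^{-\lambda} \approx \lambda = 2\tau n_2$, and multiplying it by the rate $n_1$ of the first detector, the rate of random coincidences is:

$$n_{\mathrm{random}} \approx 2 \tau \, n_1 n_2$$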
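To get a feeling for the numbers, the one-fold approximation $2\tau n_1 n_2$ can be compared with the full ‘at least one event’ expression $n_1 (1 - e^{-2\tau n_2})$. This is a minimal sketch that assumes $\tau$ is the half-width of the coincidence window, as in the shifted interval above; the function names and the sample values (single rates of 1e5 cps, $\tau$ = 3 ns) are illustrative only.

```python
import math


def random_rate(rate_1_cps: float, rate_2_cps: float, tau_s: float) -> float:
    """One-fold approximation of the random coincidence rate, 2 * tau * n1 * n2."""
    return 2.0 * tau_s * rate_1_cps * rate_2_cps


def random_rate_at_least_one(rate_1_cps: float, rate_2_cps: float,
                             tau_s: float) -> float:
    """Same estimate without the truncation: n1 * (1 - exp(-2 * tau * n2))."""
    return rate_1_cps * -math.expm1(-2.0 * tau_s * rate_2_cps)


if __name__ == "__main__":
    n1 = n2 = 1e5  # assumed single rates in cps
    tau = 3e-9     # assumed half-width of the coincidence window in seconds
    print("one-fold approximation:", random_rate(n1, n2, tau))
    print("at least one event:    ", random_rate_at_least_one(n1, n2, tau))
    # Doubling both single rates quadruples the randoms rate.
    print("ratio after doubling:  ", random_rate(2 * n1, 2 * n2, tau) / random_rate(n1, n2, tau))
```

With a window of a few nanoseconds the two estimates differ by a tiny fraction, which is why the truncation is harmless.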

Comments

- Neglecting multiple random coincidences is justified not only by the order of magnitude of the time window, but also by the fact that most coincidence selectors automatically discard multiple coincidences. (It is not possible to tell which of the potential LORs connecting the events are false.)

- If the activity in the field of view is higher, the single rates of the detectors increase in proportion to it (as long as the effect of dead time is not significant). This means that the rate of random events increases quadratically with activity.

- The single rates appear in the number of random events. Activity outside the field of view should not be seen in coincidence, but it increases the single rates and thus the number of random events as well. This is of high importance in human PET, because the axial length of the field of view is 15-20 cm; most of the human body is outside it, and the injected activity spreads through the whole body. Depending on the time window, it is possible that 80% of the detected events are random coincidences.

- The time window, and thus the number of random events can be decreased with a good temporal resolution.

- The derivation relied on the shifted time window to ensure independence. We assumed independence when deriving the pileup formula, but this would not be true in the unshifted window.

- Most devices can send the events measured in the shifted time window, or at least their rate; in this case estimates do not have to be based on the single rates.

NEC (Noise Equivalent Count rate) curve

We saw that as the activity increases, the efficiency of detection deteriorates because of the dead time. A similar effect is that the number of random coincidences increases. In theory, measuring with higher activity should improve the statistics of the measurement, but because of these losses this is not the case.

It would be useful to estimate the sensible amount of activity. A standard procedure for measuring this is laid down in the NEMA (National Electrical Manufacturers Association) standard. It consists of the following: measurements are performed over a long time using a standard phantom designed to ensure that scattered and random events occur during the tests. The activity of the phantom, which is initially high, decays; at low activity the number of random events can be neglected, since it depends approximately quadratically on activity. The ratio of scattered events presumably does not change, thus the rates of the random and the scattered events can be determined. In the end we try to characterize the useful count rate with the rate

$$\mathrm{NEC} = \frac{n_{\mathrm{true}}^2}{n_{\mathrm{true}} + n_{\mathrm{scatter}} + n_{\mathrm{random}}}$$

where $n_{\mathrm{true}}$, $n_{\mathrm{scatter}}$ and $n_{\mathrm{random}}$ are the rates of true, scattered and random coincidences, respectively.
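A commonly used form of the noise equivalent count rate is the squared true rate divided by the total prompt rate (true plus scattered plus random coincidences). The sketch below evaluates that expression; it assumes this form of the definition, and the function name and the sample rates are made up purely for illustration.

```python
def nec_rate(true_cps: float, scatter_cps: float, random_cps: float) -> float:
    """Noise equivalent count rate, true^2 / (true + scatter + random)."""
    total = true_cps + scatter_cps + random_cps
    if total <= 0.0:
        raise ValueError("the total coincidence rate must be positive")
    return true_cps ** 2 / total


if __name__ == "__main__":
    # Hypothetical (true, scatter, random) rates in cps at increasing activity.
    samples = [
        (1.0e4, 0.5e4, 0.1e4),
        (5.0e4, 2.5e4, 2.5e4),
        (8.0e4, 4.0e4, 12.0e4),
    ]
    for true_cps, scatter_cps, random_cps in samples:
        print(true_cps, scatter_cps, random_cps, nec_rate(true_cps, scatter_cps, random_cps))
```

Without scattered and random events the NEC equals the true rate; every additional scattered or random coincidence lowers it.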

It is becoming more and more obvious that NEC is not a particularly useful metric. Although it does provide an upper limit, it does not reveal anything about image quality, since image quality is not determined by the count rate alone. In many devices the image quality deteriorates well below the peak of the NEC curve. It is expected that a more precise estimation procedure will be introduced in the next revision of the standard (years from now).

The original document is available at http://549552.cz968.group/tiki-index.php?page=Single+rate%2C+pile-up%2C+dead+time+and+random+rate